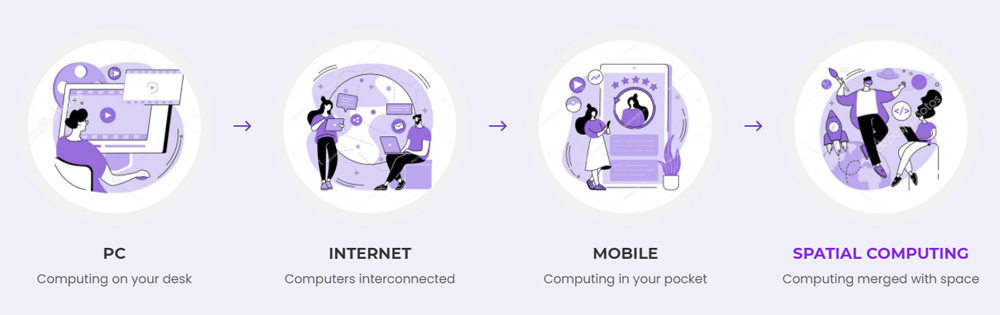

Virtual reality and augmented reality are changing the way people interact with data and physical objects, making that interaction more natural and effective. However, for a computer to recognize the space and objects around it and put them in context, new technologies are required. Enabling these scenarios is the goal of Spatial Computing, a concept that is poised to become the fourth revolution in computing.

Spatial Computing, the 4th computing revolution

There have been several revolutions in computing over the years. The first was the advent of personal computers, which brought computers out of research labs, where they had been expensive and limited tools, and into people’s homes. This was followed by the Internet revolution, which allowed computers to be interconnected and exchange information. The next step was the smartphone, which removed the barrier of the mouse-and-keyboard interface and enabled even people with limited digital skills to embrace the new world. The fourth revolution is that of Spatial Computing.

Providing a precise definition is not straightforward, as is often the case when discussing new technologies. Each person may offer a slightly different perspective. However, the basic concept is easy to understand:

Spatial Computing is the interaction with machines that have a perception of space and surrounding objects.

Spatial Computing, an umbrella term encompassing VR, AR, Mixed Reality

The roots of Spatial Computing can be traced back to the first virtual reality experiments of the early 1990s, when the first expensive VR headsets appeared on the market. The technology soon disappeared from the public radar and resurfaced, in a more dazzling form, only in 2014.

So, is Spatial Computing the same as virtual reality? Not exactly.

VR is one of many applications, certainly the best known to the general public, but it is not the only one and perhaps not even the most representative. Augmented Reality (AR) is another example of Spatial Computing and one of its most promising areas. Virtual reality tends to detach users from the real world, transporting them to imaginary worlds that are not necessarily connected to the one we live in. Augmented reality, on the other hand, adds virtual elements to the real world. The various Instagram filters that add hats, dog or cat noses and ears, and other gadgets are well-known examples of this technology, but they are not the only ones: Sygic, for instance, has built AR into its GPS navigation app.

These seemingly banal examples hide significant complexity. Behind a fake mustache pasted onto an image are complex algorithms that leverage Computer Vision and Artificial Intelligence to distinguish faces from the rest of the image, identify the different parts of each face, and seamlessly blend computer-generated images with the real ones captured by the camera.

Based on the examples so far, Spatial Computing may seem like just a fancy feature for gamers or influencers, but there are also more serious applications. One example is TeamViewer Pilot, a remote assistance application that became essential during the lockdown. Instead of having a technician come on site to fix a boiler problem, you can use TeamViewer Pilot to contact support and show the experts the problem through your smartphone camera; they can then give you the instructions needed to solve it, highlighting the relevant controls on the screen. These are not simple annotations on a static image but actual 3D indicators integrated into the “real world,” which remain “anchored” to the object even when you move. They can even indicate the position of an object that is no longer visible, for example because it is hidden behind a door.

Hardware for spatial computing: the smartphone is just the beginning

The smartphone is an excellent entry point for augmented reality and Spatial Computing applications: it’s affordable, easy to use, and everyone has one. However, it’s not the ideal tool, especially for more serious, business-oriented use cases. Virtual reality headsets such as Oculus or Vive probably won’t be the ideal solution either: despite being technologically advanced, they disconnect users from reality and immerse them in a fictional world. Instead, wearables will make the difference. Google Glass didn’t break through (it was released too early and was too immature), but Microsoft’s HoloLens 2 is gaining popularity in certain sectors, and other companies have already launched less sophisticated but equally useful glasses to support technicians and engineers in the field.

The revolution is only beginning, and in the coming years we will witness developments that would have been unimaginable until recently. According to ReportLinker analysts, this market will be worth over $333 billion by 2025, growing at a compound annual growth rate (CAGR) of 50.6%.

The evolution of Spatial Computing: remote assistance, digital twins, and applications for the business world

Currently, the most widespread Spatial Computing applications are consumer-oriented, but they will soon shift toward the business sector. As often happens, consumers act as a Trojan horse for new technologies, which are then adopted in other sectors for different purposes. In the next few years, we can expect a growing number of apps in this field that won’t rely on ordinary smartphones but will be designed for devices better suited to business use cases, such as Microsoft’s HoloLens 2.

Experimentation has already been underway for some time, including in Italy. For example, the new Genoa bridge (the San Giorgio Bridge), which replaced the infamous Morandi Bridge, has been equipped with a dense network of sensors for maintenance purposes. Data from these sensors will feed the bridge’s digital twin, making maintenance easier and more effective, and maintenance workers will use augmented reality devices to move along the structure and quickly reach the areas that need work.

But it doesn’t stop there. The spread of robots, especially Automated Guided Vehicles (AGVs), will further drive Spatial Computing research aimed at making material handling more efficient and safer in large logistics centers such as Amazon’s, where “automation” is the keyword. This research will involve hardware, with increasingly unobtrusive and “natural” wearable devices, but above all it will be driven by software, the true engine of this revolution.

Software will indeed be the real challenge. Today it’s easy for a company to buy a headset to enable AR scenarios or to acquire robots. The complexity lies in developing custom applications that can harness their capabilities across different usage scenarios, and building this new generation of applications will require new tools.